Caching Strategies for Improved Performance in Backend Applications

Maximizing Backend Performance: A Deep Dive into Caching Strategies and Challenges

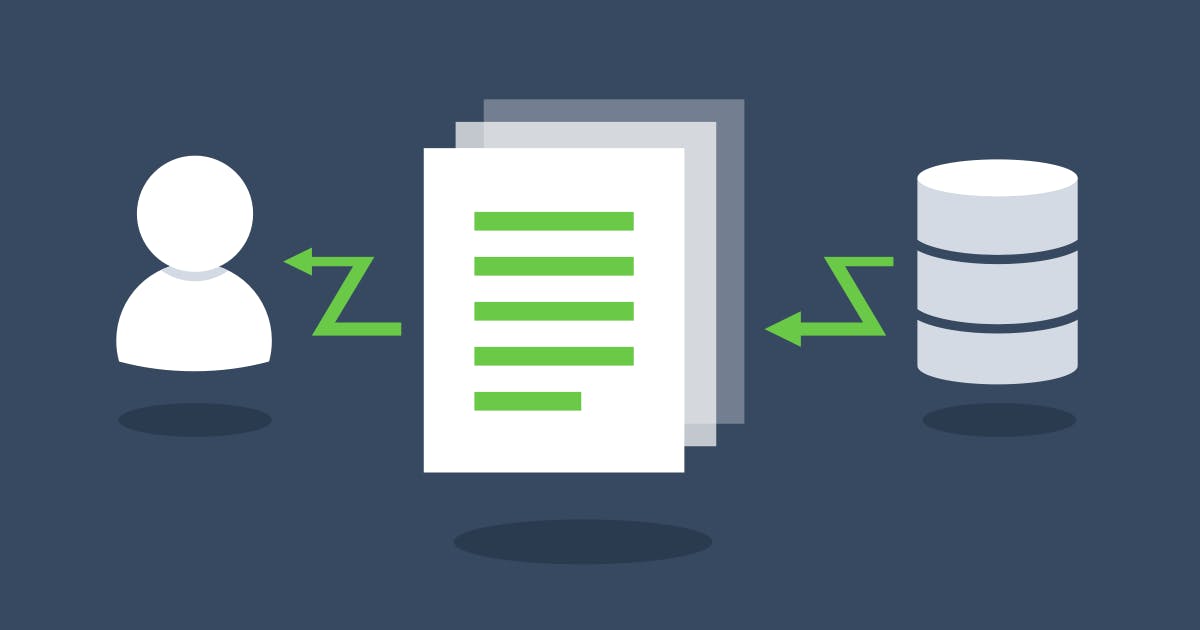

Caching serves as a critical component in the realm of backend applications, playing a pivotal role in enhancing performance and optimizing user experiences. At its core, caching involves storing frequently accessed data in a temporary storage location, thereby reducing the need to repeatedly retrieve information from the original source, such as a database or an external API.

Imagine you're browsing a website, and each time you visit a particular page, it takes a noticeable amount of time to load. This delay often occurs because the server needs to fetch data from its database or perform complex computations before rendering the page. Here's where caching steps in to save the day. By storing previously generated content or computed results, caching enables subsequent requests for the same information to be fulfilled swiftly, significantly reducing latency and improving overall responsiveness.

In this article, we'll delve into the caching strategies tailored specifically for backend applications. Our focus will be on elucidating various techniques and methodologies designed to bolster performance and efficiency in the backend ecosystem. Whether you're a seasoned developer looking to fine-tune your existing caching mechanisms or a newcomer eager to grasp the fundamentals, this exploration aims to equip you with useful insights and practical knowledge.

Fundamentals of Caching:

To comprehend the nuances of caching, it's imperative to grasp its fundamental concepts and mechanics. At its essence, caching operates on a simple principle: storing frequently accessed data in a readily accessible location, thereby obviating the need for repetitive computations or data retrieval operations.

Caching acts as a high-speed intermediary between whoever requests the data and the original source of that data, intercepting requests and swiftly furnishing precomputed or cached responses. This process hinges on the utilization of a cache, a temporary storage mechanism that stores data in a format optimized for rapid retrieval.

Now, let's discuss the types of data ideally suited for caching. Not all data is created equal, and certain types are more amenable to caching than others. Generally, data that remains relatively static or experiences infrequent updates is deemed suitable for caching. Examples include static assets such as images, CSS files, and JavaScript libraries, as well as database query results and computed values derived from resource-intensive operations.

By leveraging caching intelligently and judiciously, developers can unlock a myriad of performance benefits, ranging from reduced server load and enhanced scalability to improved response times and heightened user satisfaction. In the subsequent sections, we'll explore various caching strategies tailored to address diverse use cases and scenarios, empowering you to optimize your backend applications for peak performance and efficiency.

Now that we've established a foundational understanding of caching, let's dive into some basic caching techniques commonly employed in backend applications.

In-memory caching:

In-memory caching represents one of the most straightforward and efficient caching mechanisms available to developers. As the name suggests, this technique involves storing cached data directly in the system's memory, typically within the application's runtime environment. By capitalizing on the fast access speeds offered by RAM (Random Access Memory), in-memory caching facilitates rapid retrieval of cached data, thereby minimizing latency and enhancing overall system responsiveness. It is commonly used where data volatility is low and access speed is paramount, which makes it well-suited for database query results, session data, and frequently read files.
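As a minimal sketch of the idea, the snippet below keeps computed values in a plain Python dictionary that lives in the process's memory. The class name `InMemoryCache`, the method `get_or_compute`, and the function `slow_query` are hypothetical names chosen for illustration; `slow_query` merely stands in for an expensive database call.

```python
import time
from typing import Any, Callable, Dict


class InMemoryCache:
    """A tiny process-local cache that keeps values in a plain dict (RAM)."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        # Serve from memory when possible; otherwise compute once and remember.
        if key not in self._store:
            self._store[key] = compute()
        return self._store[key]


def slow_query() -> list:
    """Hypothetical stand-in for an expensive database query."""
    time.sleep(0.05)
    return [{"id": 1, "name": "example"}]


cache = InMemoryCache()
first = cache.get_or_compute("users:all", slow_query)   # runs slow_query
second = cache.get_or_compute("users:all", slow_query)  # served from memory
```

Because the dictionary lives inside one process, its contents disappear on restart and are not shared between application instances, which is one reason this approach suits data that is cheap to rebuild.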

Client-side Caching:

Moving on, let's explore client-side caching, another fundamental caching strategy utilized in backend development. Unlike in-memory caching, which occurs within the server-side infrastructure, client-side caching involves storing cached data directly on the client's device, typically within the user's web browser. This approach leverages the browser's local storage mechanisms, such as the browser cache or web storage APIs, to cache static assets and resources, including images, CSS files, JavaScript libraries, and even entire web pages. From the backend's side, this behavior is typically steered through HTTP response headers such as Cache-Control and ETag, which tell the browser how long a copy may be reused and how to revalidate it. By caching content on the client side, developers can reduce server load and network latency, leading to faster page loads and a smoother user experience. However, it's essential to note that client-side caching is subject to certain limitations and considerations, such as cache management issues and the risk of serving stale content to users.
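To make the backend's role in client-side caching more concrete, here is a hedged sketch of how a server might build cache-related HTTP response headers. `Cache-Control`, `ETag`, `If-None-Match`, and the `304 Not Modified` status are standard HTTP; the functions `make_etag` and `respond`, and the default `max_age` of one hour, are assumptions made for this example rather than part of any framework.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # A validator derived from the content itself, quoted as HTTP expects.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str] = None,
            max_age: int = 3600) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a cacheable resource."""
    etag = make_etag(body)
    headers = {
        # Lets the browser reuse its copy for max_age seconds without asking.
        "Cache-Control": f"public, max-age={max_age}",
        "ETag": etag,
    }
    if if_none_match == etag:
        # The browser's copy is still current, so no body is sent.
        return 304, headers, b""
    return 200, headers, body


# First visit: full response. Revalidation with the same ETag: 304, empty body.
status, headers, payload = respond(b"body { color: black; }")
status_again, _, payload_again = respond(b"body { color: black; }",
                                         if_none_match=headers["ETag"])
```

When the content changes, the ETag changes with it, so a revalidating browser receives the new body instead of a 304.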

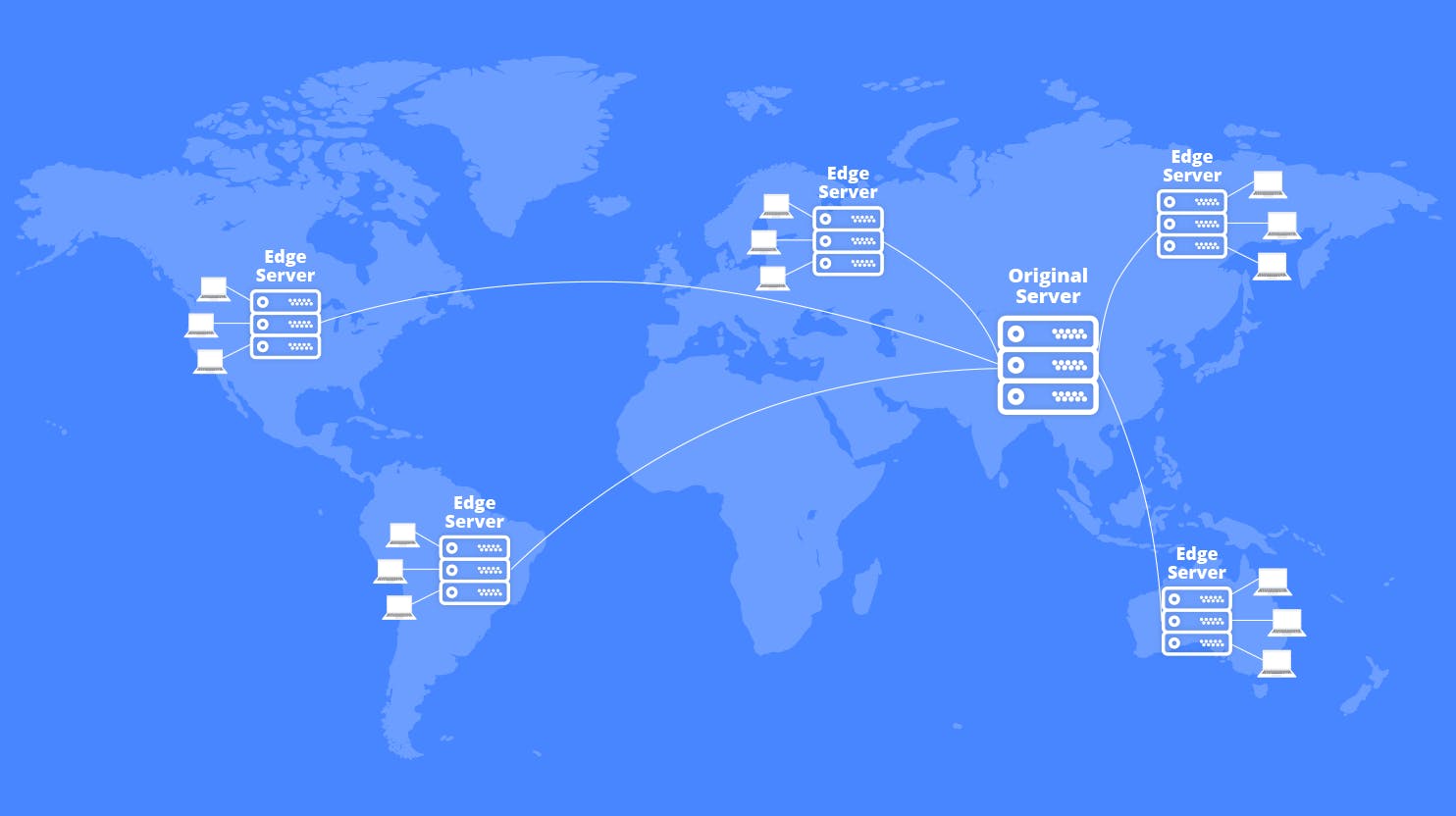

CDN (Content Delivery Network) caching:

Finally, let's discuss CDN (Content Delivery Network) caching, a ubiquitous caching technique employed to optimize content delivery and enhance global performance. CDN caching operates by distributing cached content across a network of geographically dispersed servers, known as edge servers or points of presence (PoPs). When a user requests content, the CDN automatically routes the request to the nearest edge server, which serves the content from its cache or, on a miss, fetches it from the origin server and stores it for later requests, thus minimizing latency and reducing the burden on origin servers. CDN caching is particularly effective for delivering static assets, such as images, videos, and web scripts, to users worldwide, resulting in improved load times, enhanced reliability, and superior scalability. By integrating CDN caching into their backend infrastructure, developers can ensure fast content delivery and a consistent user experience across geographical locations and network conditions.
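The edge-server behavior can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: `EdgeServer`, `origin`, `route`, and the two region names are hypothetical, and the "nearest edge" decision, which real CDNs typically make through DNS or anycast routing, is reduced to a dictionary lookup.

```python
from typing import Callable, Dict, List


class EdgeServer:
    """A simulated point of presence that caches responses from the origin."""

    def __init__(self, region: str, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.region = region
        self._fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, bytes] = {}

    def get(self, path: str) -> bytes:
        if path not in self._cache:
            # Cache miss at the edge: go back to the origin once.
            self._cache[path] = self._fetch_from_origin(path)
        return self._cache[path]


origin_hits: List[str] = []


def origin(path: str) -> bytes:
    """Hypothetical origin server; records every request it has to serve."""
    origin_hits.append(path)
    return f"content of {path}".encode()


edges = {"eu": EdgeServer("eu", origin), "us": EdgeServer("us", origin)}


def route(user_region: str) -> EdgeServer:
    # Simplification: pick the edge by region name.
    return edges[user_region]


route("eu").get("/logo.png")  # miss at the EU edge, origin is contacted
route("eu").get("/logo.png")  # hit at the EU edge
route("us").get("/logo.png")  # miss at the US edge, origin is contacted
# origin_hits now holds two entries, one per edge
```

Each edge warms its own cache independently, so the origin sees roughly one request per asset per edge rather than one per user.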

Advanced Caching Strategies:

As we delve deeper into the realm of caching, it's essential to explore advanced techniques that address nuanced challenges and maximize the efficiency of backend applications.

Cache Invalidation Techniques

Cache invalidation techniques are a crucial aspect of advanced caching strategies, aimed at maintaining data freshness and ensuring that cached content remains relevant and up-to-date. Invalidation involves explicitly marking or removing cached items when their associated data changes, thereby preventing users from accessing outdated or stale information. Common cache invalidation techniques include time-based expiration, where cached items are automatically purged after a predetermined period, and event-based invalidation, where a specific data change event triggers removal of the affected entries. By implementing robust cache invalidation mechanisms, developers can strike a balance between performance optimization and data consistency, thereby enhancing the overall reliability and usability of their applications.
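Both invalidation styles can be sketched in a few lines. The class `TTLCache`, the helper `update_user`, and the key format `user:<id>` below are hypothetical; the clock is injectable only so the expiration logic can be exercised without waiting in real time.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache with time-based expiration plus explicit (event-based) invalidation."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based expiration: the entry has outlived its TTL.
            del self._store[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Event-based invalidation: called when the underlying data changes.
        self._store.pop(key, None)


def update_user(db: Dict[int, str], cache: TTLCache, user_id: int, name: str) -> None:
    """Write to the (hypothetical) database, then drop the stale cache entry."""
    db[user_id] = name
    cache.invalidate(f"user:{user_id}")
```

In practice the two are often combined: the event-based path handles known writes promptly, while the TTL acts as a safety net for changes the application never hears about.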

Cache Partitioning and Sharding

Moving on, let's explore cache partitioning and sharding, advanced techniques employed to optimize caching performance and scalability in large-scale distributed systems. Partitioning involves dividing the cache into multiple segments or partitions, each responsible for storing a subset of cached data. By partitioning the cache, developers can distribute the workload evenly across multiple cache instances, thereby reducing contention and improving throughput. Similarly, cache sharding involves horizontally partitioning the cache across multiple nodes or servers, with each shard responsible for storing a distinct subset of data. This approach enables developers to scale caching infrastructure horizontally, accommodating growing workloads and maximizing resource utilization. By leveraging cache partitioning and sharding, organizations can grow cache capacity and throughput by adding nodes, effectively catering to the demands of modern, data-intensive environments.
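One simple way to decide which shard owns a key is to hash the key and take the result modulo the number of shards. The sketch below assumes that scheme; `ShardedCache` and its methods are hypothetical names, and each dictionary merely stands in for a separate cache node.

```python
import hashlib
from typing import Any, Dict, List, Optional


class ShardedCache:
    """Spreads keys across a fixed number of shards by hashing the key."""

    def __init__(self, shard_count: int) -> None:
        if shard_count < 1:
            raise ValueError("shard_count must be at least 1")
        # Each dict stands in for a separate cache node or server.
        self._shards: List[Dict[str, Any]] = [{} for _ in range(shard_count)]

    def shard_index(self, key: str) -> int:
        # Use a stable hash: Python's built-in hash() for str is
        # randomized per process by default, so it would not agree across nodes.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self._shards)

    def set(self, key: str, value: Any) -> None:
        self._shards[self.shard_index(key)][key] = value

    def get(self, key: str) -> Optional[Any]:
        return self._shards[self.shard_index(key)].get(key)

    def shard_sizes(self) -> List[int]:
        return [len(shard) for shard in self._shards]


cache = ShardedCache(shard_count=4)
for i in range(1000):
    cache.set(f"user:{i}", i)
```

A known drawback of plain modulo hashing is that changing the shard count remaps most keys; consistent hashing is a common alternative when shards are added or removed frequently.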

Cache Coherency and Consistency

Lastly, let's look at cache coherency and consistency, fundamental concepts that underpin the integrity and reliability of cached data. Cache coherency refers to the synchronization of cached data across multiple cache instances or nodes, ensuring that all copies of a given data item remain consistent and up-to-date. Consistency, on the other hand, pertains to the guarantee that cached data reflects the most recent updates and changes made to the underlying data source. By implementing robust cache coherency and consistency mechanisms, developers can mitigate the risk of data inconsistencies and staleness, thereby fostering trust and confidence in their backend applications.
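One way to keep several local caches coherent is to invalidate a key on every node whenever it is written. The following sketch assumes that approach and runs everything in a single process; `Node` and `Coordinator` are hypothetical names, and in a distributed deployment the invalidation loop would be replaced by some form of messaging between machines.

```python
from typing import Any, Dict, List


class Node:
    """An application node with its own local cache in front of a shared source."""

    def __init__(self, name: str, source: Dict[str, Any]) -> None:
        self.name = name
        self._source = source
        self.local_cache: Dict[str, Any] = {}

    def read(self, key: str) -> Any:
        if key not in self.local_cache:
            self.local_cache[key] = self._source.get(key)
        return self.local_cache[key]


class Coordinator:
    """Applies writes to the source and invalidates the key on every node."""

    def __init__(self, source: Dict[str, Any]) -> None:
        self._source = source
        self._nodes: List[Node] = []

    def add_node(self, name: str) -> Node:
        node = Node(name, self._source)
        self._nodes.append(node)
        return node

    def write(self, key: str, value: Any) -> None:
        # Update the source of truth first, then drop every cached copy,
        # so the next read on any node refetches the fresh value.
        self._source[key] = value
        for node in self._nodes:
            node.local_cache.pop(key, None)


source = {"price": 10}
coordinator = Coordinator(source)
node_a = coordinator.add_node("a")
node_b = coordinator.add_node("b")
node_a.read("price")
node_b.read("price")
coordinator.write("price", 12)
```

After the write, neither node holds the old value, so both see 12 on their next read.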

Implementing Caching in Practice:

Now that we've explored various caching strategies, it's time to discuss how to put them into action within real-world backend applications.

Selection and integration of caching strategies:

The first step in implementing caching is selecting the most appropriate caching strategies tailored to your application's specific requirements and use cases. This involves carefully assessing factors such as data access patterns, volatility, and scalability requirements. For instance, if your application predominantly serves static content, client-side caching may be the ideal choice to reduce server load and enhance user experience. Conversely, if your application relies heavily on dynamic data that frequently changes, a combination of cache invalidation techniques and in-memory caching may be more suitable to ensure data freshness and consistency. By strategically selecting and integrating caching strategies, developers can effectively optimize performance and scalability while minimizing resource overhead.

Performance monitoring and optimization

Once caching strategies are implemented, it's crucial to monitor and optimize their performance continuously. Performance monitoring involves tracking key metrics such as cache hit ratio, cache eviction rate, and response times to identify potential bottlenecks or inefficiencies. By leveraging monitoring tools and logging mechanisms, developers can gain valuable insights into cache utilization patterns and identify areas for improvement. Additionally, performance optimization techniques such as cache tuning, capacity planning, and load balancing can help fine-tune caching infrastructure to better align with application requirements and user demands. By adopting a proactive approach to performance monitoring and optimization, developers can ensure that caching mechanisms remain effective and efficient, providing users with a seamless and responsive experience across the board.
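The metrics mentioned above are straightforward to collect at the cache itself. As an illustration, the sketch below wraps a small least-recently-used cache with counters for hits, misses, and evictions; `InstrumentedLRUCache` is a hypothetical class, and the LRU eviction policy is an assumption chosen for the example.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class InstrumentedLRUCache:
    """LRU cache that counts hits, misses, and evictions for monitoring."""

    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0
        self.evictions = 0

    def get(self, key: Hashable) -> Optional[Any]:
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        return None

    def set(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self._capacity:
            self._data.popitem(last=False)  # drop the least recently used entry
            self.evictions += 1

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = InstrumentedLRUCache(capacity=2)
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")     # hit
cache.set("c", 3)  # evicts "b", the least recently used key
cache.get("b")     # miss
cache.get("a")     # hit
```

A persistently low hit ratio or a high eviction count may suggest that the cache is too small for the working set or that the wrong data is being cached.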

Challenges and Considerations:

As with any technology, caching comes with its own set of challenges and considerations that developers must address to ensure optimal performance and reliability in backend applications.

Overhead and trade-offs of caching

Firstly, let's dive into the overhead and trade-offs associated with caching. While caching can significantly improve performance by reducing data retrieval times and server load, it's essential to acknowledge that caching itself incurs overhead. Maintaining and managing cache infrastructure requires additional resources, including memory, CPU cycles, and storage space. Moreover, caching introduces complexity into the system architecture, potentially complicating data synchronization and consistency mechanisms. Developers must carefully weigh the benefits of caching against its associated overhead and trade-offs, considering factors such as data volatility, access patterns, and scalability requirements.

Handling cache failures and scalability challenges

Secondly, handling cache failures and scalability challenges poses a significant concern for developers tasked with implementing caching solutions in backend applications. Cache failures such as data corruption, along with unexpected misses caused by eviction or expiration, can lead to inconsistencies and degraded performance if left unchecked. Implementing robust error handling mechanisms and cache validation strategies is crucial to mitigate the impact of cache failures and ensure data integrity. Additionally, scalability challenges may arise as the application's workload increases, necessitating the adoption of scalable caching architectures and distributed caching solutions. By leveraging techniques such as cache partitioning, sharding, and load balancing, developers can effectively scale caching infrastructure to accommodate growing demands and maintain optimal performance under varying workloads.
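A common defensive pattern is to treat the cache as optional: if it fails, the request falls back to the original data source instead of failing outright. The sketch below assumes that pattern; `CacheUnavailable`, `FlakyCache`, and `read_through` are hypothetical names, and `FlakyCache` exists only to simulate a backend that can go down.

```python
import logging
from typing import Any, Callable, Dict, Optional

logger = logging.getLogger(__name__)


class CacheUnavailable(Exception):
    """Hypothetical error raised when the cache backend cannot be reached."""


class FlakyCache:
    """Stand-in cache backend that can be switched into a failing state."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self.healthy = True

    def get(self, key: str) -> Optional[Any]:
        if not self.healthy:
            raise CacheUnavailable("cache backend down")
        return self._data.get(key)

    def set(self, key: str, value: Any) -> None:
        if not self.healthy:
            raise CacheUnavailable("cache backend down")
        self._data[key] = value


def read_through(cache: FlakyCache, key: str,
                 load_from_source: Callable[[str], Any]) -> Any:
    """Return the value for key, degrading to the source if the cache fails."""
    try:
        cached = cache.get(key)
        if cached is not None:
            return cached
    except CacheUnavailable:
        logger.warning("cache read failed for %s; falling back to source", key)
    value = load_from_source(key)
    try:
        cache.set(key, value)
    except CacheUnavailable:
        logger.warning("cache write failed for %s; continuing without caching", key)
    return value
```

With this shape, an outage of the cache costs latency rather than correctness, although the data source must then be able to absorb the extra load.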

Conclusion:

In conclusion, caching strategies play a pivotal role in enhancing backend performance and scalability, but they also present challenges that must be addressed judiciously. Throughout this exploration, we've discussed various caching techniques, ranging from basic strategies like in-memory caching to advanced approaches such as cache partitioning and coherency. We've also examined the importance of monitoring and optimizing caching performance to ensure efficiency and reliability in production environments.

As we reflect on the key insights gleaned from this discussion, it becomes evident that caching holds immense potential for optimizing backend performance and improving user experiences. By embracing caching techniques and addressing associated challenges, developers can unlock new possibilities for scalability, responsiveness, and reliability in their applications. Moving forward, we encourage further exploration and experimentation with caching techniques, as they continue to adapt to the changing demands of modern software development. Through continued innovation and implementation of caching best practices, we can collectively propel backend performance to new heights and deliver exceptional experiences to users worldwide.